Click flooding detection and the false-positive challenge

Fraud is bad, but false positives can be worse.

But are they?

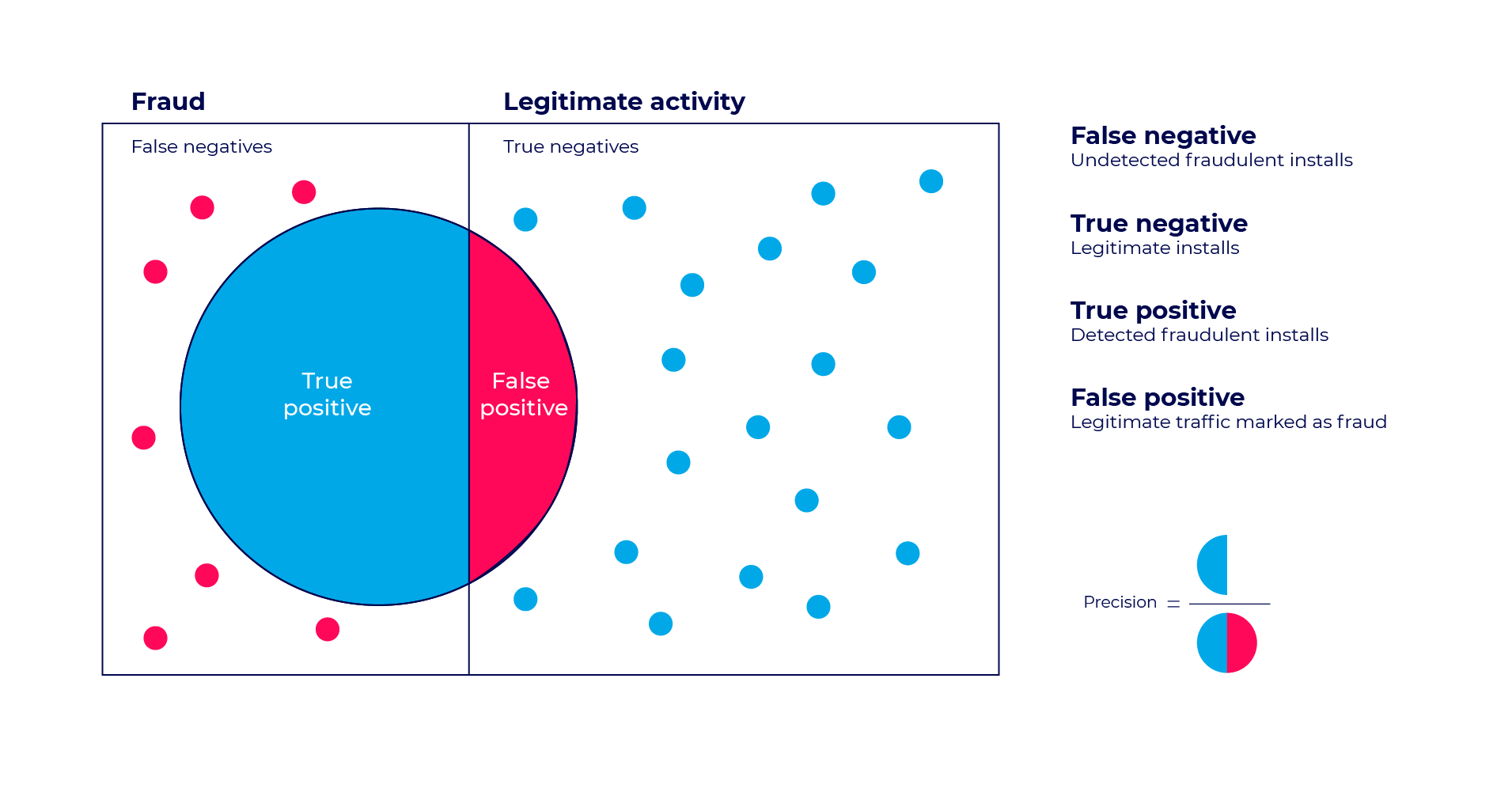

Unlike a true positive, where real fraud is identified and blocked, a false positive penalizes a legitimate source. This can harm an advertiser’s relationship with its quality media partners instead of protecting the advertiser from malicious ones.

Any responsible fraud solution should aim for the lowest possible false positive rate in order to maintain its integrity and credibility while protecting its clients’ best interests.

Sounds like a simple thing to do, right?

Well… partially.

What is the False Positive test?

To understand the False Positive test and its repercussions, we must first understand precision and recall, two highly important data analytics KPIs, particularly in fraud detection. Precision is the share of installs flagged as fraud that really are fraudulent; recall is the share of all fraudulent installs that get flagged.

The false positive test sets the minimum precision level allowed for our fraud detection algorithm. The higher the precision level, the more conservative the fraud detection becomes.

A lower precision level, on the other hand, may increase the total amount of fraudulent traffic detected, but at the cost of a higher false positive rate.

Each fraud rule or machine learning algorithm eventually includes a false positive threshold that could be tuned higher or lower based on multiple variables and needs.

The key is finding the right balance between detecting as many mobile ad fraud incidents as possible, while maintaining a high enough precision level.
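To make the trade-off concrete, here is a minimal sketch in Python. It assumes each install already carries a fraud score and a known label; the scores, labels, and threshold values are made up for illustration and do not reflect any real detection rule.

```python
def precision_recall(true_pos, false_pos, false_neg):
    """Return (precision, recall) from confusion-matrix counts."""
    flagged = true_pos + false_pos
    actual_fraud = true_pos + false_neg
    precision = true_pos / flagged if flagged else 0.0
    recall = true_pos / actual_fraud if actual_fraud else 0.0
    return precision, recall


def evaluate_threshold(scored_installs, threshold):
    """Flag every install whose score is at or above the threshold.

    scored_installs: list of (fraud_score, is_fraud) pairs with known labels.
    """
    tp = sum(1 for score, is_fraud in scored_installs if score >= threshold and is_fraud)
    fp = sum(1 for score, is_fraud in scored_installs if score >= threshold and not is_fraud)
    fn = sum(1 for score, is_fraud in scored_installs if score < threshold and is_fraud)
    return precision_recall(tp, fp, fn)


# Hypothetical labelled scores, for illustration only.
sample = [(0.95, True), (0.90, True), (0.70, False),
          (0.65, True), (0.40, False), (0.30, True)]

strict = evaluate_threshold(sample, 0.8)  # conservative threshold
loose = evaluate_threshold(sample, 0.6)   # aggressive threshold

print("strict:", strict)  # (1.0, 0.5): no false positives, half the fraud missed
print("loose:", loose)    # (0.75, 0.75): more fraud caught, one legitimate install flagged
```

With this toy data, lowering the threshold catches one more fraudulent install but also flags a legitimate one, which is exactly the balance described above.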

An opportunistic point of view

Most marketers act with some restraint and self-reflection, which builds their credibility. Unfortunately, fraudsters see this as an open opportunity.

We know that fraudsters are always on the lookout for loopholes to exploit in order to gain an advantage over the market. This typically refers to technical loopholes, but rest assured that ethical boundaries present a big opportunity as well.

It has become common practice for fraudsters to examine the changes and updates made by fraud prevention vendors and optimize their activity to bypass detection logic and thresholds.

A relatively simple loophole to exploit is the limitation imposed by the false positive test: fraudsters deliberately mix legitimate installs into their fraudulent traffic.

Don’t confuse this with an attempt to improve their traffic in any way; take this tactic at face value. The goal is to whitewash poor quality traffic. Any legitimate traffic or install coming in is meant to be used later as a counterclaim whenever other, fraudulent parts of their activity are blocked.

Hyper active devices

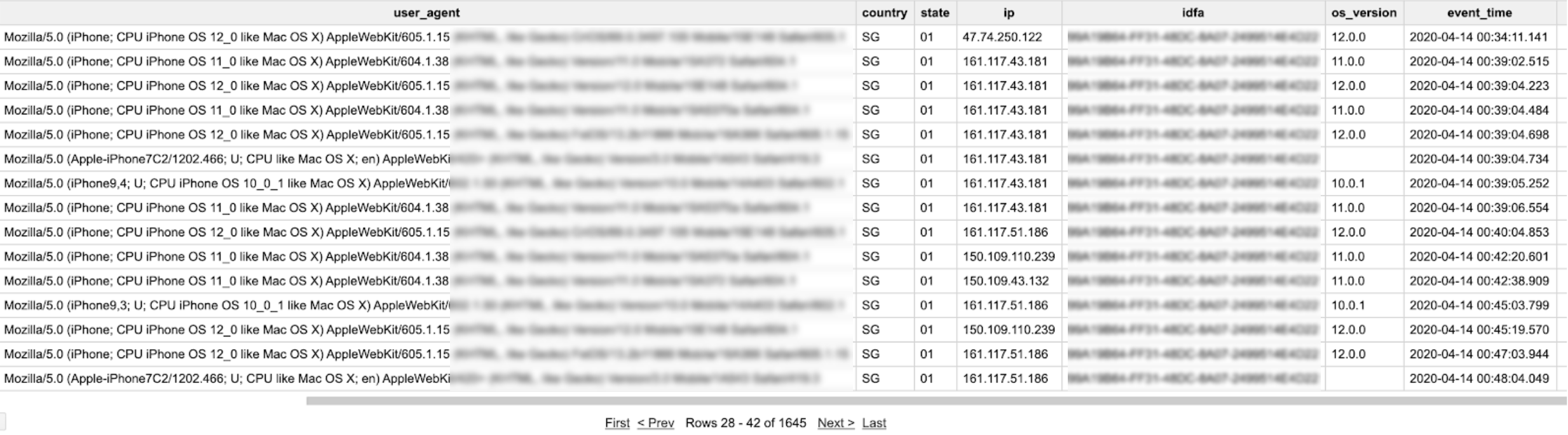

Click flooding can be generated by a single device sending many (sometimes thousands of) clicks a day. The example shows an identical IDFA for all reported actions, indicating that the same device is being used.

The event_time column shows that all clicks (1,645 in total, as shown below the table) were reported on the same day. The recurring, similar IPs help confirm that this is the same physical device. However, the OS version and user agent are not the same for all clicks, which means that someone forged these values.
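The pattern described above (one IDFA, one day, a very high click count, and inconsistent OS version or user agent values) can be sketched in a few lines of Python. This is only an illustration: the field names mirror the columns mentioned in the example, while the `MAX_CLICKS_PER_DAY` threshold and the sample records are assumptions, not real detection values.

```python
from collections import defaultdict
from datetime import datetime

MAX_CLICKS_PER_DAY = 100  # hypothetical threshold, chosen for illustration


def find_hyperactive_devices(clicks, max_clicks_per_day=MAX_CLICKS_PER_DAY):
    """Group clicks by (IDFA, day) and report groups above the threshold."""
    groups = defaultdict(list)
    for click in clicks:
        day = datetime.fromisoformat(click["event_time"]).date()
        groups[(click["idfa"], day)].append(click)

    flagged = []
    for (idfa, day), rows in groups.items():
        if len(rows) <= max_clicks_per_day:
            continue
        flagged.append({
            "idfa": idfa,
            "day": day.isoformat(),
            "clicks": len(rows),
            # One real device should not report several OS versions
            # or user agents within the same day.
            "distinct_ips": len({r["ip"] for r in rows}),
            "distinct_os_versions": len({r["os_version"] for r in rows}),
            "distinct_user_agents": len({r["user_agent"] for r in rows}),
        })
    return flagged


# Made-up records: one device with 150 clicks and rotating values,
# and one ordinary device with 3 clicks.
sample_clicks = [
    {"idfa": "AAAA-1111", "event_time": f"2019-01-01 10:{i % 60:02d}:00",
     "ip": "10.0.0.1", "os_version": f"12.{i % 3}", "user_agent": f"agent-{i % 5}"}
    for i in range(150)
] + [
    {"idfa": "BBBB-2222", "event_time": "2019-01-01 09:00:00",
     "ip": "10.0.0.2", "os_version": "12.1", "user_agent": "agent-0"}
    for _ in range(3)
]

suspects = find_hyperactive_devices(sample_clicks)
for suspect in suspects:
    print(suspect)
```

A report like this only surfaces candidates; whether to act on the IP, the IDFA, or the source is a separate decision.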

Can “legitimate” installs generated by such sources still be referred to as legitimate?

Click flooding, as a general tactic, relies on massive click volumes populated with real device details. However, these details are often obtained using illegitimate means, such as malware on user devices, or even purchased on the darknet.

This means that even so-called “legitimate” installs are often organic installs that the advertiser never should have paid for: these users went through the install process organically without ever encountering an ad.

What would be the right approach in this case? Should we block the IP from providing additional clicks, or perhaps block the IDFA from gaining credit for further attributions?

Whatever our solution is, it won’t be error-free; some risks have to be taken.

Flooding away

A different, more conservative tactic is based on statistical models that estimate the chance of a conversion for a given number of clicks.

As a result, advertisers are bombarded by a host of clicks for the remote chance of them converting into an app install. Fraudsters could populate fake click information and communicate it to advertisers on behalf of users who have no idea that this is being carried out.

Another tactic is firing click URLs for every ad impression. Users may be exposed to real ads, but no ad clicks are actually made. A user is very likely to view the ad several times, thus increasing the chances for their “clicks” to convert. Once users eventually download the app, the fraudster wins attribution for an organic install.

Creating and delivering these fake clicks costs almost nothing for fraudsters or their operation, and they don’t mind the low conversion rates, as profitability is high.
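The signature of this tactic is a huge click volume paired with a tiny click-to-install conversion rate. A minimal Python sketch of that check follows; the `min_clicks` and `max_conversion_rate` thresholds and the sample numbers are hypothetical and would need tuning against real traffic.

```python
def flag_click_flooding(source_stats, min_clicks=10_000, max_conversion_rate=0.001):
    """Return {source: conversion_rate} for high-volume, low-conversion sources.

    source_stats: {source: {"clicks": int, "installs": int}}
    Sources below min_clicks are skipped because their rate is too noisy.
    """
    flagged = {}
    for source, stats in source_stats.items():
        clicks, installs = stats["clicks"], stats["installs"]
        if clicks < min_clicks:
            continue
        rate = installs / clicks
        if rate <= max_conversion_rate:
            flagged[source] = rate
    return flagged


# Made-up figures, for illustration only.
sample_stats = {
    "source_a": {"clicks": 2_000_000, "installs": 400},  # 0.02% conversion
    "source_b": {"clicks": 50_000, "installs": 1_500},   # 3% conversion
    "source_c": {"clicks": 500, "installs": 0},          # too few clicks to judge
}

flagged = flag_click_flooding(sample_stats)
print(flagged)
```

Note that a low-volume source such as `source_c` passes this check untouched, which is the gap the next tactic exploits.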

Sneaking under the radar

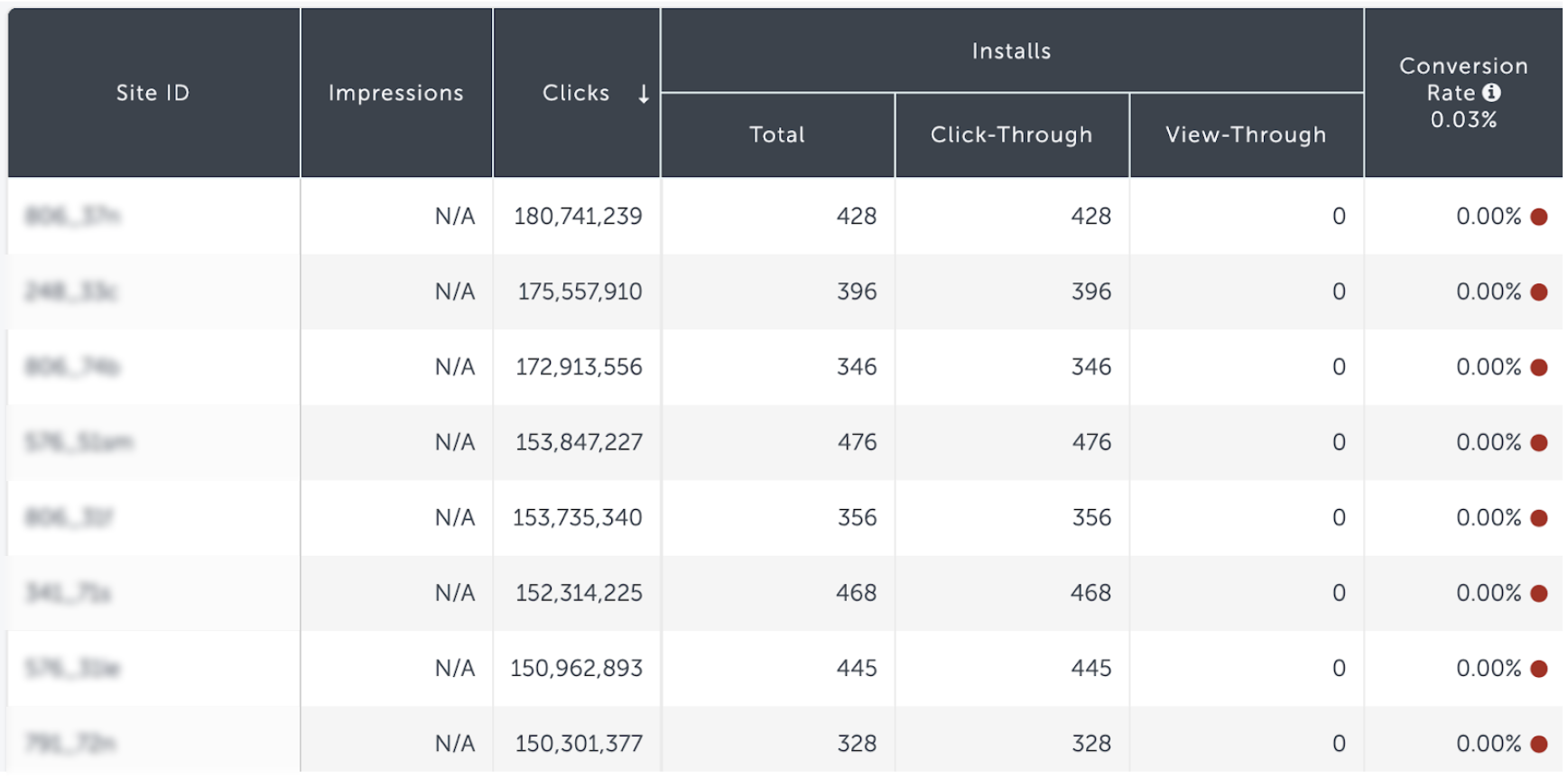

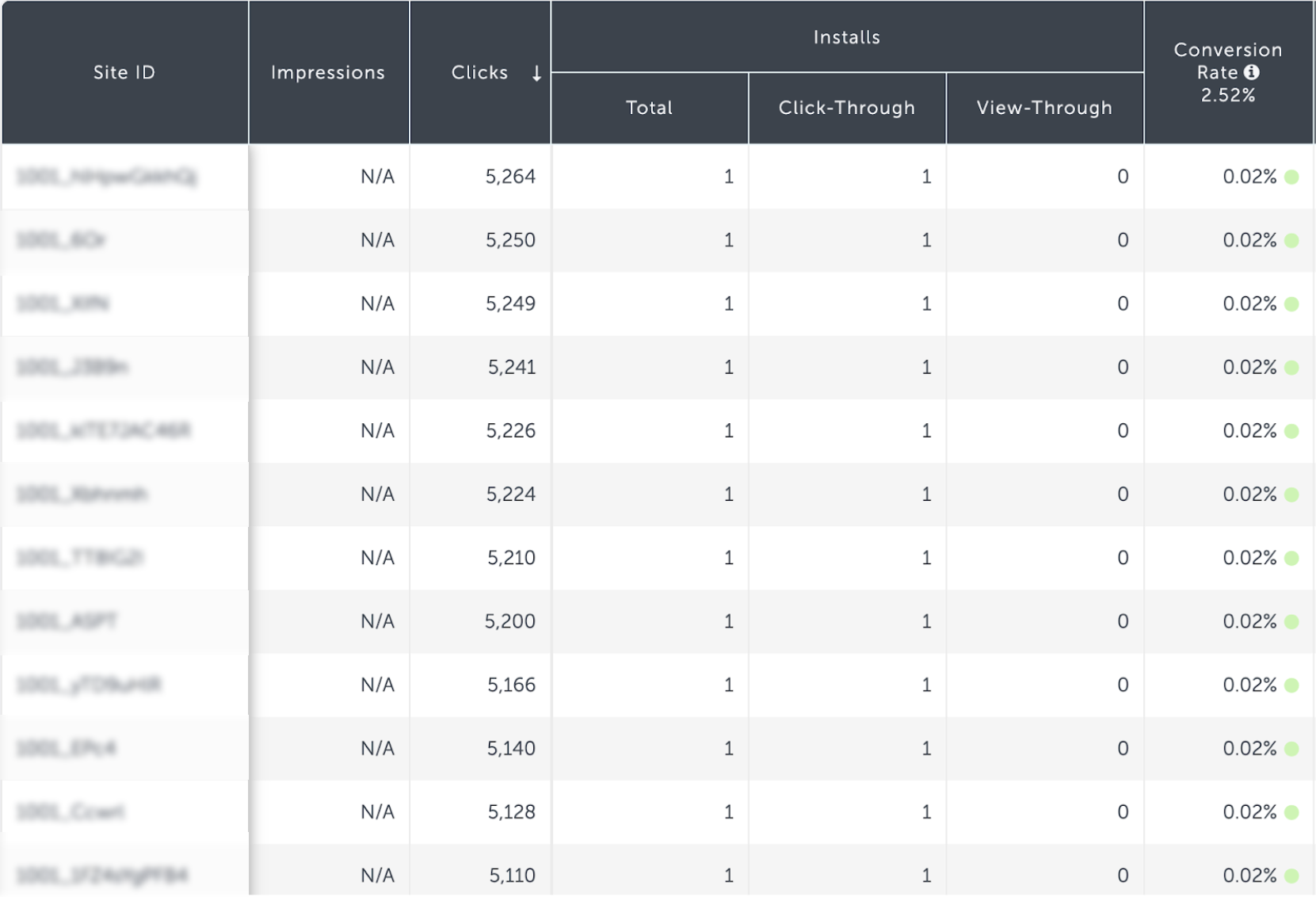

A similar but more sophisticated method of click flooding is to break up click floods across many smaller sites, each associated with a lower number of clicks.

This could make each individual site seem “innocent” when examined independently.

However, when looking at the bigger picture, this is no different from the example described above.

The number of clicks associated with each of these sites is actually a great example of the business intelligence (BI) carried out by fraudsters, who test anti-fraud thresholds and optimize their traffic distribution toward a number that keeps them below the radar.

Most of these sites will show very low conversion rates. The few that do convert will show better conversion rates and will be used as an optimization lifeline: fraudsters present these few “good” channels as a reason to keep their activity going.

When media partners split a single site ID into thousands of meaningless names, it reflects badly on AppsFlyer, but even more so on the advertiser, who finds it harder to accurately measure and improve successful campaigns or, alternatively, to ditch poorly performing sources.

Considering that most of these sites will only have a few installs during their lifetime, studying their methods has become almost impossible.

This is where AppsFlyer’s post-attribution fraud detection comes into play, as these low scale sites are almost impossible to block in real time. A retrospective algorithm can trace back this activity and assign it to fraud trends that real-time detection cannot identify with high precision.

Some ad networks that have gained a reputation for deliberately manipulating this parameter are treated with more extreme measures. Their traffic is examined at a less granular level: these small sites are aggregated by their prefix name, as well as other similar parameters, then “judged” together and blocked in real time.

We’re aware that a few “legitimate” sites may be affected by these actions as they’re mixed into a cluster of fraudulent small sites. While we work hard on mitigating this edge case as much as possible, the logic shown above highlights just how necessary these actions are.
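As a rough illustration of judging small sites together, the Python sketch below groups site IDs by prefix and evaluates each cluster as a whole. The `prefix_suffix` naming format, the `_` separator, and all thresholds are assumptions made for this example; real site IDs and the actual clustering parameters may look quite different.

```python
from collections import defaultdict


def aggregate_by_prefix(site_stats, separator="_"):
    """Sum clicks and installs for all site IDs sharing the same prefix.

    site_stats: {site_id: {"clicks": int, "installs": int}}
    Assumes (hypothetically) that site IDs look like "<prefix>_<suffix>".
    """
    clusters = defaultdict(lambda: {"sites": 0, "clicks": 0, "installs": 0})
    for site_id, stats in site_stats.items():
        prefix = site_id.split(separator, 1)[0]
        cluster = clusters[prefix]
        cluster["sites"] += 1
        cluster["clicks"] += stats["clicks"]
        cluster["installs"] += stats["installs"]
    return dict(clusters)


def suspicious_clusters(clusters, min_sites=3, min_clicks=10_000,
                        max_conversion_rate=0.001):
    """List prefixes whose combined traffic looks like a split click flood."""
    result = []
    for prefix, c in clusters.items():
        if c["sites"] < min_sites or c["clicks"] < min_clicks:
            continue
        if c["installs"] / c["clicks"] <= max_conversion_rate:
            result.append(prefix)
    return result


# Made-up data: three small "pub" sites that each stay under a
# per-site radar of 10,000 clicks, plus one ordinary site.
sample_sites = {
    "pub_001": {"clicks": 4_000, "installs": 1},
    "pub_002": {"clicks": 4_000, "installs": 1},
    "pub_003": {"clicks": 4_000, "installs": 1},
    "news_home": {"clicks": 2_000, "installs": 60},
}

clusters = aggregate_by_prefix(sample_sites)
flagged_prefixes = suspicious_clusters(clusters)
print(clusters["pub"])
print(flagged_prefixes)
```

Each `pub` site looks harmless on its own, yet the combined cluster crosses the volume threshold with a very low conversion rate.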

An unforgiving approach

Does the above suggest that we’re abandoning the false positive test?

Of course not.

As mentioned earlier, the false positive test is crucial for maintaining ecosystem integrity and a reliable fraud protection mechanism. However, we’re not going to accept whitewashing and the cynical use of low scale “quality” traffic as an excuse to keep harmful sources active.

This is a great example of a case where we’re willing to lower our precision standards, become more aggressive, and block more fraudulent installs.

Click flood blocking (like blocking other types of fraud) doesn’t happen instantaneously. It takes time and requires carefully examining each and every publisher independently. To do so at scale, we’re constantly improving our cluster blocking mechanism, which allows fraud detection even when insufficient information is gathered at the install level.

AppsFlyer will not tolerate abusive behavior from specific media sources that can be harmful both to the ecosystem and, more specifically, to our customers.

Our goal is to catch fraudulent attempts from various sources earlier, faster, and more effectively.